The Evolution of Content Measurement in the Era of Artificial Intelligence

The advent of artificial intelligence has fundamentally reshaped the content landscape, marking a decisive shift from manual efforts to AI-augmented workflows. This evolution demands a re-evaluation of how we measure success. For instance, a marketing team that uses AI to scale content production quickly faces a specific challenge: keeping quality high at that speed.

Traditional metrics, such as pageviews or raw traffic numbers, are increasingly becoming secondary indicators. On their own, they offer an incomplete picture of AI's impact on content strategy and business outcomes.

To gauge the ROI of AI initiatives, we must define AI content KPIs that bridge the gap between operational efficiency and tangible business value. These metrics cover not just reach but also productivity value (such as content velocity and resource optimization) and adoption rates that reflect how well internal teams have integrated AI. This shift helps marketing leaders understand AI's direct contribution to strategic goals. For a comprehensive overview, see E-E-A-T and AI content measurement.

Why Traditional Metrics Fail to Capture the Full Value of AI Content

The proliferation of AI in content creation presents a critical challenge to conventional measurement paradigms. The sheer volume of AI-generated content can create a 'volume trap', where an abundance of articles, posts, or summaries does not automatically equate to success. Chasing quantity without a strategic purpose often dilutes brand authority and overwhelms the audience rather than engaging them meaningfully.

Traditional metrics like pageviews or clicks barely scratch the surface of AI's impact. They fail to capture engagement depth and utility—whether the content truly answers user queries, solves problems, or guides them through a customer journey. A common mistake is celebrating a 300% increase in content output when conversion rates remain stagnant, indicating a failure to measure true content utility beyond surface-level interactions. This is why specialized AI content KPIs are necessary to track meaningful progress.

Furthermore, relying solely on computation-based model quality scores, such as perplexity or coherence, can be misleading. These internal AI metrics are useful during development, but they do not inherently translate into tangible business outcomes like lead generation, customer retention, or brand sentiment. Similarly, although higher traffic is often assumed to be better, a smaller, highly engaged audience drawn by genuinely useful content often yields superior ROI, which makes deeper engagement metrics paramount.

A Comprehensive Framework for Tracking AI Content Performance

Moving beyond the limitations of traditional metrics, successful AI integration demands a sophisticated measurement framework. This framework must encompass output volume, tangible business value, efficiency gains, and the strategic impact derived from AI-powered initiatives. A holistic approach that balances internal operational metrics with external content performance is crucial for demonstrating true ROI and fostering continuous improvement.

To effectively track and optimize performance, organizations should implement a multi-faceted approach. This involves establishing clear AI content KPIs across various dimensions, ensuring that every piece of AI-generated content contributes meaningfully to strategic objectives.

The 5 Pillars of AI Content Performance

Here is a comprehensive framework designed to capture the full spectrum of AI content's value:

- Productivity & Efficiency: These metrics quantify the operational benefits of AI content generation.

  - Time to Market (TTM): Measure the time elapsed from content brief inception to publication. AI tools can significantly shorten this cycle; tracking the reduction in TTM for AI-assisted content versus human-only content directly demonstrates efficiency gains.

  - Cost Per Asset Reduction: Calculate the average cost per asset, including human hours, software licenses, and revision cycles. A sustained decrease in this metric highlights cost savings. Even partial automation can yield meaningful savings in production budgets.

- Quality & Trust: While AI accelerates creation, maintaining high standards is paramount.

  - Revision Rates: Monitor the number and depth of human revisions required for AI-generated drafts. A declining revision rate over time suggests improved AI output quality and better prompt engineering.

  - Factual Accuracy Score: Implement a systematic review process to assess the factual correctness of AI-generated information. This can involve human verification or integration with fact-checking APIs.

  - Brand Voice Alignment: Develop a scoring system, potentially AI-assisted, to evaluate how well content adheres to established brand guidelines, tone, and style. Inconsistent brand voice can erode audience trust, making this a critical KPI.

- Adoption Metrics: These KPIs measure the internal utilization and acceptance of AI tools within content teams.

  - User Adoption Rate: Track the percentage of eligible content creators or marketers actively using AI tools. Low adoption can indicate usability issues or a lack of perceived value.

  - Usage Frequency & Depth: Monitor how often teams interact with AI tools and the complexity of the tasks they assign to them. Higher frequency and more sophisticated usage suggest successful integration into workflows. Robust training and clear use-case demonstrations are commonly cited as key drivers of adoption.

- Impact & Utility: Beyond basic engagement, these metrics assess the true value and depth of the content.

  - Content Depth & Comprehensiveness: Evaluate whether AI-generated content provides sufficient detail and covers relevant subtopics, moving beyond superficial summaries. This assessment can be qualitative or based on keyword coverage and entity recognition.

  - Content Utility Score: Develop a proprietary score that combines metrics such as time on page for specific sections, scroll depth, interaction with embedded elements (e.g., calculators, interactive diagrams), and explicit user feedback. This measures how well the content addresses user needs.

- Holistic ROI Calculation: Synthesizing the above, this pillar provides a complete financial picture.

  - Value of Output vs. Cost: Balance the direct cost of AI tools and human oversight against the quantifiable value of the output (e.g., reduced production costs, increased lead generation, or faster campaign launches).

  - Human Hours Saved & Reallocated: Quantify the human effort saved by AI automation and track how those hours are reallocated to higher-value, strategic tasks like complex ideation or deep analysis. This demonstrates strategic uplift rather than just efficiency.
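The Holistic ROI pillar reduces to simple arithmetic once costs and value are expressed in the same currency. The Python sketch below is illustrative only: it assumes a hypothetical `PeriodCosts` record, prices saved hours at a single loaded hourly rate, and leaves `attributed_value` (for example, the value of additional leads) to whatever attribution model your team already uses. None of these names come from a specific tool.

```python
from dataclasses import dataclass


@dataclass
class PeriodCosts:
    """Inputs for one reporting period (hypothetical field names)."""
    ai_tool_cost: float      # AI tool licences/subscriptions for the period
    oversight_hours: float   # human review and editing time spent on AI output
    hourly_rate: float       # loaded cost of one human hour
    hours_saved: float       # hours saved versus the pre-AI process
    attributed_value: float  # e.g. value of extra leads, from your attribution model


def total_cost(p: PeriodCosts) -> float:
    """Direct AI tool cost plus the cost of human oversight."""
    return p.ai_tool_cost + p.oversight_hours * p.hourly_rate


def total_value(p: PeriodCosts) -> float:
    """Saved hours priced at the hourly rate, plus attributed business value."""
    return p.hours_saved * p.hourly_rate + p.attributed_value


def roi(p: PeriodCosts) -> float:
    """Return on investment as a fraction: (value - cost) / cost."""
    cost = total_cost(p)
    if cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_value(p) - cost) / cost


def reallocation_share(hours_saved: float, hours_reallocated: float) -> float:
    """Fraction of saved hours moved to strategic work (ideation, analysis)."""
    if hours_saved <= 0:
        return 0.0
    return hours_reallocated / hours_saved
```

For example, $2,000 of tooling, 40 oversight hours and 120 saved hours at $50/hour, plus $2,000 of attributed value, gives a cost of $4,000, a value of $8,000, and an ROI of 1.0 (100%). Tracking `reallocation_share` alongside ROI separates pure efficiency from the strategic uplift described above.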

Pro Tip: To accurately attribute ROI, establish clear baseline metrics before AI implementation. This allows for a direct comparison and a more robust demonstration of the AI initiative's impact on both the bottom line and strategic resource allocation.
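A minimal sketch of that baseline comparison in Python follows. The KPI names and the higher-is-better directions in `HIGHER_IS_BETTER` are hypothetical placeholders; the point is that a percentage change only counts as an improvement in the direction that matters for that KPI (a falling cost per asset is good, a falling conversion rate is not).

```python
from typing import Dict

# Hypothetical KPI names; True means "higher is better" for that KPI.
HIGHER_IS_BETTER: Dict[str, bool] = {
    "time_to_market_days": False,
    "cost_per_asset": False,
    "revision_rate": False,
    "conversion_rate": True,
}


def percent_change(baseline: float, current: float) -> float:
    """Relative change from the pre-AI baseline, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) * 100 / baseline


def compare_to_baseline(baseline: Dict[str, float],
                        current: Dict[str, float]) -> Dict[str, dict]:
    """Report change and direction-aware improvement for each shared KPI."""
    report: Dict[str, dict] = {}
    for kpi, higher_better in HIGHER_IS_BETTER.items():
        if kpi not in baseline or kpi not in current:
            continue  # skip KPIs without both a baseline and a current value
        change = percent_change(baseline[kpi], current[kpi])
        improved = change > 0 if higher_better else change < 0
        report[kpi] = {"change_pct": round(change, 1), "improved": improved}
    return report
```

With a baseline TTM of 10 days falling to 6, the report shows a change of -40.0% flagged as an improvement, while an unchanged conversion rate shows 0.0% and is not flagged.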

Optimizing for Extractable Content and Machine Readability

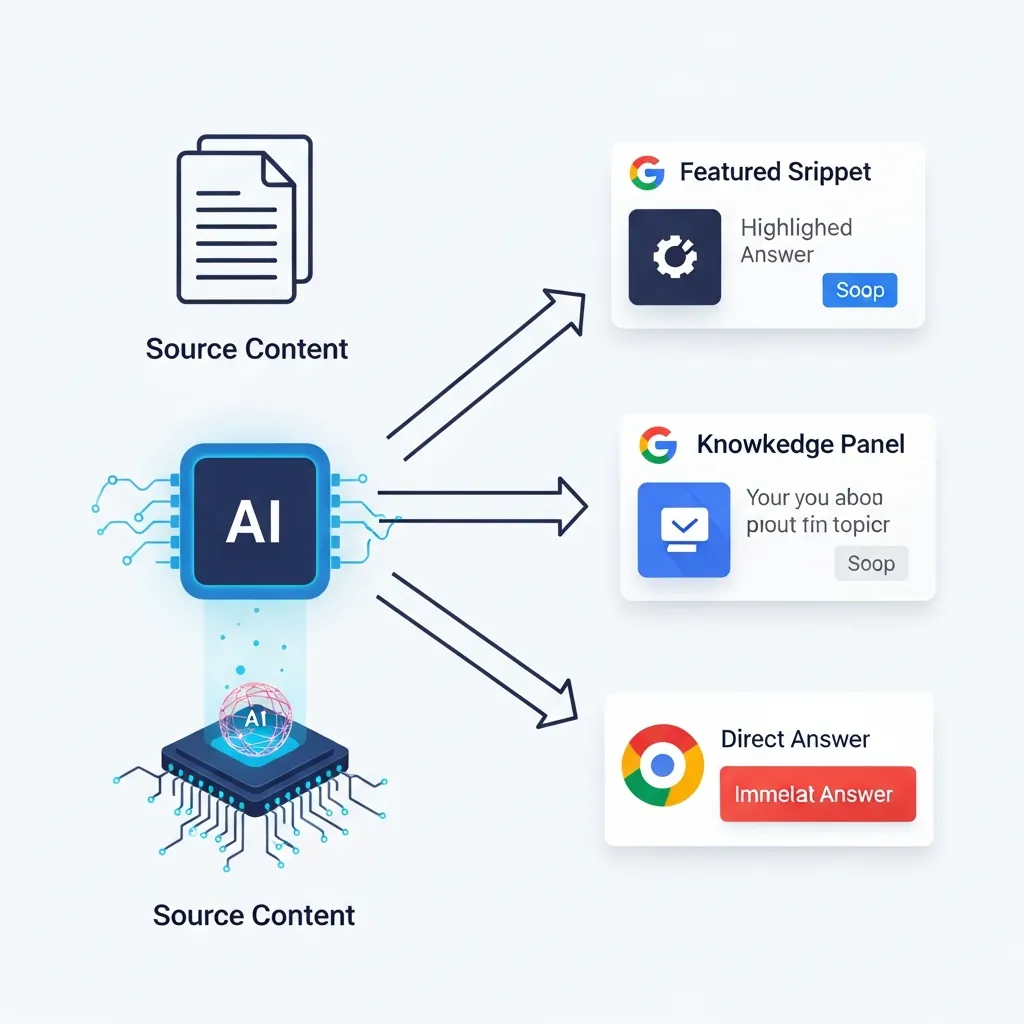

The rise of AI-driven search has fundamentally reshaped content consumption, ushering in an era of 'Zero-Click' content where users often find answers directly in search results. For content to deliver business value, it must be optimized for machine readability and easy extraction by AI systems.

Several Key Performance Indicators capture how extractable your content is. Featured Snippet Rate shows how often your content supplies the direct answer for a tracked query, and Structured Data Performance tracks whether search engines successfully parse your schema markup. Visibility in short-form answers and inclusion in knowledge panels also reflect extractability. A common mistake is publishing information-rich content with poorly implemented structured data: the content can be highly relevant yet remain ineligible for rich results and harder for machines to interpret, which erodes its productivity value.
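Both KPIs reduce to simple ratios. The Python sketch below assumes query-tracking records exported as dictionaries with a hypothetical `owns_snippet` flag, and JSON-LD blocks already extracted from your pages as strings. The structured-data check is only a syntax-level proxy (valid JSON declaring `@context` and `@type`); it does not replace validation with a search engine's own rich-result testing tools.

```python
import json
from typing import Iterable, List


def featured_snippet_rate(tracked_queries: Iterable[dict]) -> float:
    """Share of tracked queries where our page holds the featured snippet.

    Each record is assumed (hypothetical format) to look like
    {"query": str, "owns_snippet": bool}, e.g. from a rank-tracking export.
    """
    records = list(tracked_queries)
    if not records:
        return 0.0
    return sum(1 for r in records if r["owns_snippet"]) / len(records)


def structured_data_parse_rate(jsonld_blocks: List[str]) -> float:
    """Share of JSON-LD blocks that parse and declare @context and @type.

    Syntax-level check only. For simplicity, top-level arrays and
    @graph documents are counted as failures here.
    """
    if not jsonld_blocks:
        return 0.0
    ok = 0
    for block in jsonld_blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # malformed JSON counts as a parsing failure
        if isinstance(data, dict) and "@context" in data and "@type" in data:
            ok += 1
    return ok / len(jsonld_blocks)
```

Tracking both ratios over time separates "the markup is broken" from "the markup is fine but the answer is not being surfaced".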

Measuring content 'readability' now extends beyond human comprehension to Large Language Models (LLMs). Traditional metrics like Flesch-Kincaid remain useful, but we must also assess semantic cohesion and entity recognition so that LLMs can accurately interpret, summarize, and reuse your information. Prioritizing structured data and clear, concise language helps future-proof content against evolving AI algorithms and increases how often AI systems surface it.
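For the human-readability side, the Flesch-Kincaid Grade Level is a published formula: 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59. The Python sketch below implements it with a deliberately rough, assumed syllable heuristic (vowel groups minus a silent trailing "e"), so its scores will differ slightly from dedicated readability libraries. It does not measure the LLM-oriented properties such as semantic cohesion.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, then drop a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # "make" -> 1, but keep the final syllable in words like "table".
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)
```

For example, "The cat sat. The dog ran." scores about -2.62: very short sentences of one-syllable words fall below first-grade level, which is why the scale can go negative.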

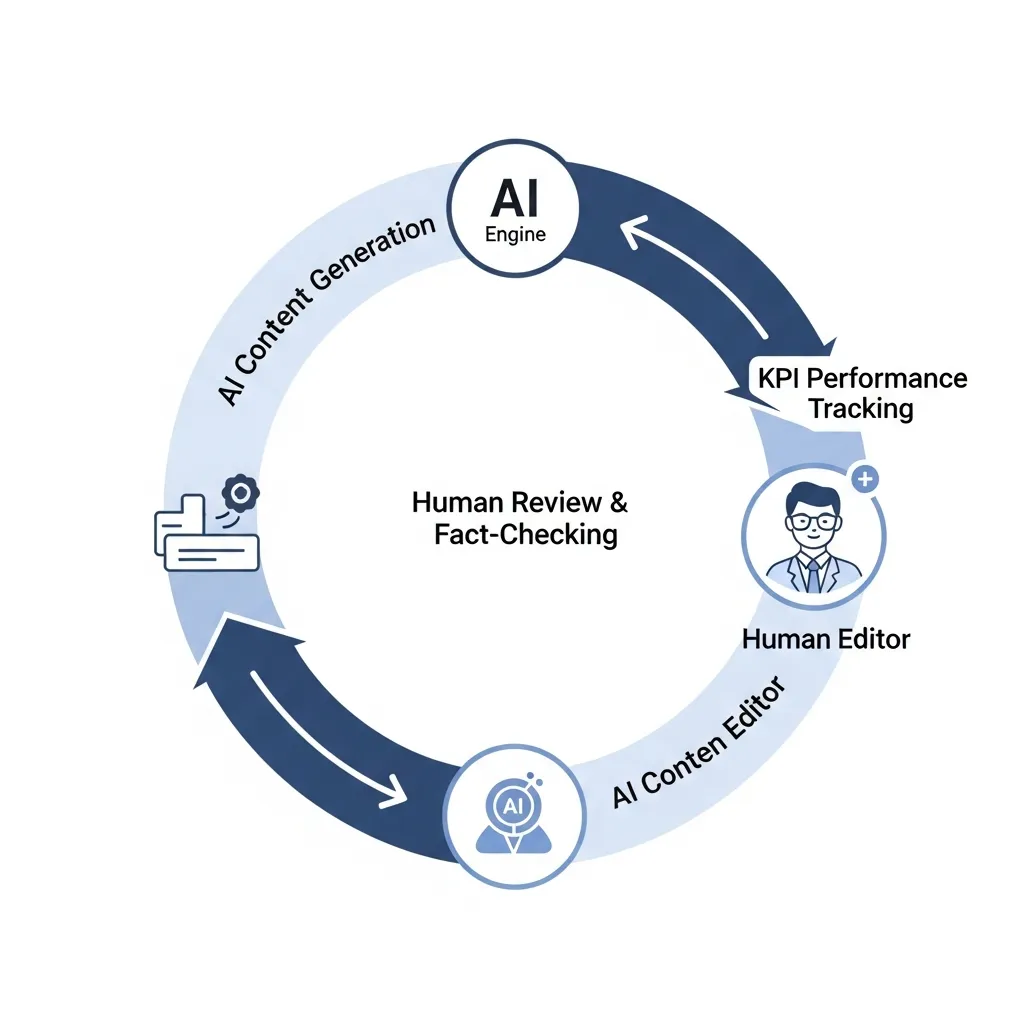

The Vital Role of Human Oversight and Ethical Auditing

While AI accelerates content creation, human oversight remains paramount for quality and ethical integrity. Tracking the Human-in-the-Loop (HITL) intervention rate—the percentage of AI outputs requiring significant editorial refinement—provides a tangible metric. For instance, reducing this rate from 40% to 15% not only boosts productivity but also signals improved AI alignment with brand voice and factual accuracy. This metric helps identify areas where AI models need further training or prompt refinement.
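One way to operationalize the HITL intervention rate is to compare each AI draft with its published version. The sketch below assumes a word-level `difflib.SequenceMatcher` similarity as the measure of editing and a hypothetical threshold of 0.3 for "significant"; teams may prefer to log editor time or tracked changes instead.

```python
from difflib import SequenceMatcher
from typing import Iterable, Tuple

# Hypothetical threshold: a draft counts as significantly edited when the
# word-level similarity to its published version falls below 0.7.
SIGNIFICANT_EDIT_THRESHOLD = 0.3


def edit_share(ai_draft: str, published: str) -> float:
    """1 - similarity ratio, computed over word sequences."""
    return 1.0 - SequenceMatcher(None, ai_draft.split(), published.split()).ratio()


def hitl_intervention_rate(pairs: Iterable[Tuple[str, str]],
                           threshold: float = SIGNIFICANT_EDIT_THRESHOLD) -> float:
    """Share of (draft, published) pairs whose edit share exceeds the threshold."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    flagged = sum(1 for draft, final in pairs if edit_share(draft, final) > threshold)
    return flagged / len(pairs)
```

Computing this rate per month or per prompt template makes it easy to see whether a change such as a refined prompt actually moves the rate downward.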

Crucially, human auditors are vital for identifying and mitigating bias in AI-generated outputs. Unchecked AI can perpetuate stereotypes, generate inaccurate information, or adopt an inappropriate tone, risking brand reputation. Relying solely on AI without robust human checks often leads to content that lacks nuance and struggles to maintain long-term organic visibility.

Ultimately, human editing tends to support long-term search performance. While AI can draft quickly, human editors infuse content with E-E-A-T signals (Experience, Expertise, Authoritativeness, and Trustworthiness), which search engines such as Google emphasize in their quality guidelines. The most effective approach is to treat AI as an accelerator for human expertise, not a replacement, so that content resonates authentically and performs sustainably.

Future-Proofing Your AI Content Measurement Strategy

The future of AI content measurement demands a definitive shift from volume to intrinsic value. As AI models rapidly evolve, so too must our metrics. Iterative KPI adjustment is paramount; setting static KPIs for dynamic AI deployments can misrepresent true impact. For instance, without adapting, a team might overlook a 15% increase in content relevance driven by a new AI iteration.

The most effective strategy involves continuous refinement, aligning metrics like adoption rates and productivity value with evolving business objectives. Ultimately, success is not just about automation efficiency; it is about artfully balancing AI's capabilities with authentic brand storytelling. This human-AI synergy ensures content resonates deeply, fostering genuine audience connection. To initiate this shift, begin by auditing your current AI content KPIs against business value and adoption metrics today.

Frequently Asked Questions

What are AI content KPIs?

AI content KPIs are specific metrics used to measure the efficiency, quality, and business impact of content generated or assisted by artificial intelligence, such as content velocity and revision rates.

Why are traditional metrics insufficient for AI content?

Traditional metrics like pageviews often fail to capture the operational efficiency, brand alignment, and factual accuracy improvements that AI brings to a content strategy.

How do you measure the ROI of AI content?

ROI is measured by balancing the cost of AI tools and human oversight against the quantifiable value of the output, such as human hours saved and improved lead generation.

What is the Human-in-the-Loop (HITL) intervention rate?

This KPI measures the percentage of AI-generated content that requires significant human editing, helping teams track the improvement of AI alignment over time.