The Evolution of Search: Understanding Generative Results

The search landscape is undergoing a profound transformation. AI Overviews now deliver synthesized, direct answers at the top of the Search Engine Results Page (SERP), moving beyond traditional lists of links. Advanced algorithms process data to construct concise summaries, often answering user queries without requiring further navigation.

This pivot creates a critical challenge for content creators: zero-click searches. When answers are provided instantly, the incentive for users to click through to a website diminishes, impacting organic traffic and brand visibility. For example, a complete step-by-step repair guide might now appear directly on the SERP.

This shift requires a proactive AI Overviews strategy focused on:

- Maintaining organic visibility in a generative environment

- Maximizing content utility within AI-generated answers

- Securing a consistent brand presence across all search features

To delve deeper into securing authority in this new era, explore AI Overviews.

How AI Overviews Process and Synthesize Information

At the core of AI Overviews are Large Language Models (LLMs), which serve as the generative engine responsible for interpreting complex queries and formulating natural language responses. These powerful models do not merely retrieve existing answers; they synthesize new ones based on vast datasets to provide direct, comprehensive replies.

However, LLMs can occasionally "hallucinate" or invent information. This is where Retrieval-Augmented Generation (RAG) becomes indispensable. RAG systems first retrieve highly relevant information from a vast index of web content—similar to traditional search retrieval. Subsequently, the LLM synthesizes this retrieved data to craft a concise answer, effectively grounding the generative process in factual, external sources.
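The retrieve-then-synthesize flow can be sketched in a few lines. This is a toy illustration under stated assumptions, not how any search engine implements it: the three-document `DOCUMENTS` index, the word-overlap scoring, and the prompt wording are all hypothetical, and the final call to a language model is left out.

```python
import re
from collections import Counter

# Hypothetical mini index; a production system retrieves from a web-scale index.
DOCUMENTS = {
    "repair-guide": "To fix a leaking tap, turn off the water supply and replace the worn washer.",
    "seo-basics": "Search engine optimization improves how pages rank in organic results.",
    "rag-explainer": "Retrieval-augmented generation grounds a language model in retrieved sources.",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Step 1: return ids of up to k documents sharing the most words with the query."""
    query_terms = Counter(tokenize(query))
    scores = {}
    for doc_id, text in documents.items():
        overlap = sum((query_terms & Counter(tokenize(text))).values())
        if overlap > 0:
            scores[doc_id] = overlap
    # Highest overlap first; ties are broken alphabetically by id.
    return sorted(scores, key=lambda d: (-scores[d], d))[:k]


def build_grounded_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Step 2: place the retrieved passages in the prompt so the answer is grounded in them."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in sources)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


prompt = build_grounded_prompt("How do I fix a leaking tap?", DOCUMENTS)
print(prompt)
```

The point for content creators is the first step: a page that never gets retrieved cannot be cited, however well the model synthesizes.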

The exact criteria used to select and cite content within these generative answers are not publicly documented. Field observations indicate a strong emphasis on sources demonstrating high topical authority, robust E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) signals, and direct relevance to the user's query. In practice, pages from reputable domains that offer well-structured, factual, and unique insights appear to be prioritized as foundational references for AI synthesis.

The Core Framework for Generative Engine Optimization (GEO)

The emergence of AI Overviews necessitates a paradigm shift from traditional SEO to Generative Engine Optimization (GEO). A comprehensive AI Overviews strategy optimizes content for the parsing and synthesis capabilities of LLMs, ensuring information is easy for AI to understand, extract, and incorporate into responses.

Strategic Content Structuring for AI Parsing

Effective GEO begins with intentional content structure. Heading tags are vital for human readability, and they matter even more for AI Overviews. LLMs leverage headings (H1, H2, H3) to discern hierarchy, identify sub-topics, and understand relationships. Clear, semantically relevant headings guide AI through complex articles for accurate information retrieval.
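One way to check what a parser sees is to extract the heading outline from a page. The following is a minimal sketch using Python's standard `html.parser`; the `HeadingOutline` class, the `skipped_levels` helper, and the sample HTML are illustrative assumptions, not part of any search engine's tooling.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collects (level, text) pairs for h1-h3 tags in document order."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None   # level of the heading currently open, if any
        self._buffer = []    # text fragments seen inside that heading

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self._level = int(tag[1])
            self._buffer = []

    def handle_data(self, data):
        if self._level is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self.outline.append((self._level, "".join(self._buffer).strip()))
            self._level = None


def skipped_levels(outline):
    """Return headings that jump more than one level deeper than the previous heading."""
    problems = []
    previous = 0
    for level, text in outline:
        if level > previous + 1:
            problems.append(text)
        previous = level
    return problems


# Hypothetical page fragment used only for illustration.
SAMPLE_HTML = """
<h1>Generative Engine Optimization</h1>
<h2>Content Structuring</h2>
<h3>Direct Answers</h3>
<h2>Structured Data</h2>
"""

parser = HeadingOutline()
parser.feed(SAMPLE_HTML)
for level, text in parser.outline:
    print("  " * (level - 1) + text)
print("Skipped levels:", skipped_levels(parser.outline))
```

An outline that reads as a sensible table of contents, with no skipped levels, is a reasonable proxy for a hierarchy that is easy to follow.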

Bulleted summaries and lists are equally indispensable because AI handles predictable structure well. Presenting key takeaways, definitions, or steps in concise bullet points reduces the amount of text an LLM must untangle to find the point. This format allows AI to quickly identify core concepts, making them prime candidates for inclusion in an AI Overview.

The 'Direct Answer' Method: Crafting for LLM Extraction

To cater directly to generative results, adopt the 'Direct Answer' method. This involves placing concise, unambiguous definitions and immediate answers to common queries at the start of relevant sections. Think of it as pre-packaging the snippets an LLM seeks. Explicitly state "X is defined as…" or "The primary function of Y involves…" followed by a succinct explanation. These direct answers (typically 1–3 sentences) are highly conducive to LLM extraction.
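A quick audit can flag section openings that exceed the 1–3 sentence target. The sketch below is a rough heuristic under stated assumptions: the function names are hypothetical, and the sentence splitter is deliberately naive (it will miscount abbreviations such as "e.g.").

```python
import re


def count_sentences(text: str) -> int:
    """Rough count: split wherever ., !, or ? is followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return len([part for part in parts if part])


def has_direct_answer(section_opening: str, max_sentences: int = 3) -> bool:
    """True when the opening paragraph is between 1 and max_sentences sentences long."""
    return 1 <= count_sentences(section_opening) <= max_sentences


concise = (
    "GEO is a framework that adapts traditional SEO for AI models. "
    "It involves structuring content for easy parsing by LLMs, using direct "
    "answers, and reinforcing credibility through E-E-A-T signals."
)
rambling = "First point. Second point. Third point. Fourth point. Fifth point."

print(has_direct_answer(concise))
print(has_direct_answer(rambling))
```

Sentence count is only a proxy for directness; a human editor still needs to confirm that the opening actually answers the question the heading poses.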

Implementing the 'Inverted Pyramid' for AI-Friendly Hierarchies

The journalistic 'Inverted Pyramid' style is a fundamental GEO principle. It dictates that the most critical information (who, what, when, where, and why) be presented at the very beginning of an article, followed by progressively less crucial details. For AI Overviews, this ensures the LLM quickly grasps the core message without extensive parsing. Content that "buries the lead" forces more AI processing, which may lead to less accurate extractions.

Enhancing Credibility with Niche Citations and Statistics

Source trustworthiness is paramount, driven by Google's E-E-A-T guidelines. To bolster credibility for LLMs, niche citations and statistics are vital. Citing authoritative, domain-specific sources signals expertise. For instance, a medical article that cites a specific peer-reviewed journal carries more weight than one that makes the same claim without a source. Similarly, substantiating claims with current, verifiable statistics from reputable organizations increases perceived accuracy. LLMs prioritize well-supported information, making strategic citation a core GEO tactic.

Tailoring Language for Natural Language Processing (NLP) Patterns

GEO demands a deliberate focus on tailoring language for Natural Language Processing (NLP) patterns. Write in a clear, concise, and natural style that mirrors both user queries and AI responses. Avoid overly complex sentences, and define jargon immediately where it is unavoidable. The goal is semantically rich, unambiguous language that makes it easier for LLMs to identify entities and extract intent. Work semantically related terms into the text naturally to improve the AI's understanding of the broader context.

The GEO Content Synthesis Protocol

To systematically implement these strategies, consider the following protocol:

- Semantic Structuring: Utilize a logical H1-H3 hierarchy and leverage bulleted lists for key takeaways.

- Direct Answer Integration: Identify core user questions and craft concise (1–3 sentence) answers immediately following relevant headings.

- Inverted Pyramid Application: Place the most critical information at the beginning of the article and its sections.

- Credibility Reinforcement: Integrate niche-specific citations and substantiate claims with current, verifiable statistics.

- NLP Optimization: Write clear, natural content and employ semantic keywords to address user intent directly.

This protocol provides a robust framework for adapting content creation to generative search, ensuring your expertise is effectively synthesized.

Leveraging Structured Data and Technical SEO for AI Parsing

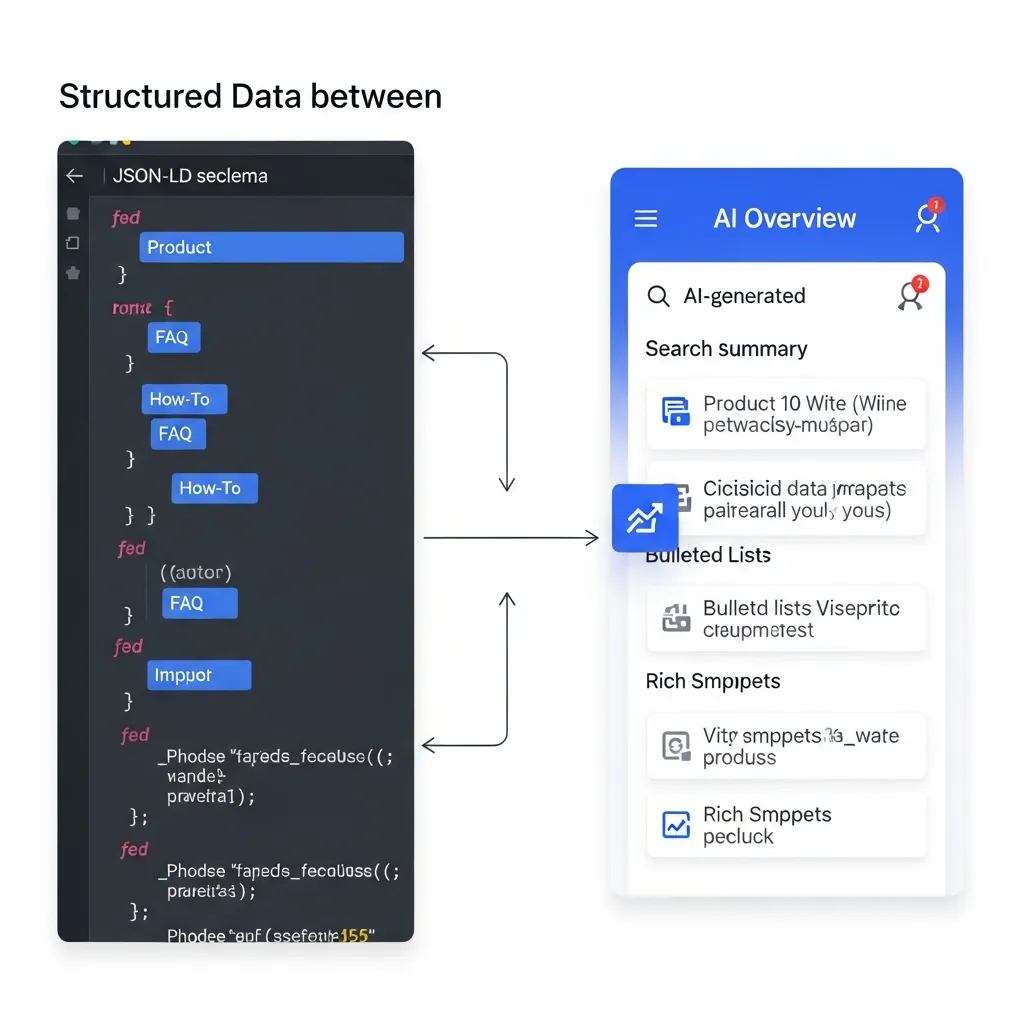

Beyond content structuring, structured data is paramount for explicit communication with AI models. Implementing Schema.org markup provides a machine-readable layer that informs AI about the nature of your content. Field observations indicate that FAQPage, HowTo, and Product schema are particularly potent for AI Overviews. These markups pre-package information into digestible formats, enabling AI to quickly extract answers or specifications without ambiguity.
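As an illustration of what this machine-readable layer looks like, the sketch below builds FAQPage markup as JSON-LD using Schema.org's `FAQPage`, `Question`, and `Answer` types. The `faq_jsonld` helper is a hypothetical name, and the question and answer text is taken from this article's own FAQ.

```python
import json


def faq_jsonld(pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    (
        "What is Generative Engine Optimization (GEO)?",
        "GEO is a framework that adapts traditional SEO for AI models.",
    ),
    (
        "Which schema types are best for AI Overviews?",
        "FAQPage, HowTo, and Product schemas are highly effective.",
    ),
])

# Embed the markup in the page inside a JSON-LD script tag.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(faq, indent=2)
    + "\n</script>"
)
print(script_tag)
```

The marked-up questions and answers should match text that is visible on the page; validate the output with a structured data testing tool before deploying it.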

For multi-modal and voice search environments, Speakable schema may offer a strategic advantage. This markup explicitly designates the content sections best suited to audio synthesis. Google documents its Speakable support as a beta feature with limited eligibility, so any influence on how AI Overviews present information in voice-activated contexts should be treated as unconfirmed. Where it is supported, content marked up with Speakable schema is more likely to be read aloud by virtual assistants, expanding visibility into a critical search segment.
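A minimal sketch of Speakable markup follows, using Schema.org's `speakable` property with a `SpeakableSpecification` that points at page sections by CSS selector. The page name, URL, and selectors are hypothetical placeholders, as is the `speakable_jsonld` helper.

```python
import json


def speakable_jsonld(name, url, css_selectors):
    """Build WebPage markup that flags the given CSS selectors as speakable."""
    return {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "name": name,
        "url": url,
        "speakable": {
            "@type": "SpeakableSpecification",
            "cssSelector": list(css_selectors),
        },
    }


page = speakable_jsonld(
    name="Generative Engine Optimization Guide",   # hypothetical page title
    url="https://example.com/geo-guide",           # hypothetical URL
    css_selectors=[".direct-answer", ".key-takeaways"],  # hypothetical selectors
)
print(json.dumps(page, indent=2))
```

Pointing the selectors at the short direct-answer paragraphs keeps the spoken output brief, which suits audio playback better than long body text.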

Concurrently, foundational technical SEO elements remain critical. Site speed and mobile-friendliness are not merely user experience factors; they are essential signals that impact AI parsing efficiency. A fast, responsive site ensures AI crawlers can access and process information swiftly. Technical data suggests that slow loading times can significantly hinder content discovery and indexing, regardless of its semantic quality.

Building Topical Authority and E-E-A-T for Generative Models

Generative models heavily weigh content quality signals, making Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) paramount. AI-powered summaries prioritize sources demonstrating genuine practical insight. Practical experience shows that content featuring direct, first-hand accounts or specialized professional understanding gains a significant edge, as AI identifies these as the most credible voices.

To cultivate this authority, developing comprehensive topic clusters is essential. Instead of isolated articles, organizations should create interconnected content ecosystems that thoroughly explore a subject. This signals to generative models a deep, holistic command over a domain. Sites with robust internal linking and extensive coverage across related sub-topics are more frequently cited by AI.
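Internal linking within a cluster can be audited with a simple link map. The sketch below is one possible approach, assuming a hypothetical five-page site whose pillar page is `/geo-guide`; in practice the `LINKS` map would come from a site crawl.

```python
# Hypothetical site: each page maps to the internal pages it links to.
LINKS = {
    "/geo-guide": ["/direct-answer", "/schema-markup", "/eeat"],
    "/direct-answer": ["/geo-guide"],
    "/schema-markup": ["/geo-guide", "/direct-answer"],
    "/eeat": [],
    "/zero-click": ["/geo-guide"],
}


def inbound_counts(links):
    """Count how many internal links point at each page, ignoring self-links."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in targets:
            if target != source:
                counts[target] = counts.get(target, 0) + 1
    return counts


def cluster_gaps(links, pillar):
    """Return (pages that do not link back to the pillar, pages with no inbound links)."""
    missing_backlink = sorted(
        page for page, targets in links.items()
        if page != pillar and pillar not in targets
    )
    counts = inbound_counts(links)
    orphans = sorted(
        page for page, count in counts.items() if count == 0 and page != pillar
    )
    return missing_backlink, orphans


missing_backlink, orphans = cluster_gaps(LINKS, "/geo-guide")
print("No link back to pillar:", missing_backlink)
print("No inbound links:", orphans)
```

Pages in either list are candidates for new internal links, so that the cluster reads as one connected body of coverage.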

Furthermore, managing digital PR and brand mentions strategically influences an AI's perception of your credibility. Generative models continuously scan for external validation—reputable backlinks, industry awards, and positive brand mentions across authoritative platforms. This external recognition acts as a powerful trust signal, enhancing your site's standing in the AI's "knowledge graph."

Traditional SEO vs. AI Overviews: A Strategic Comparison

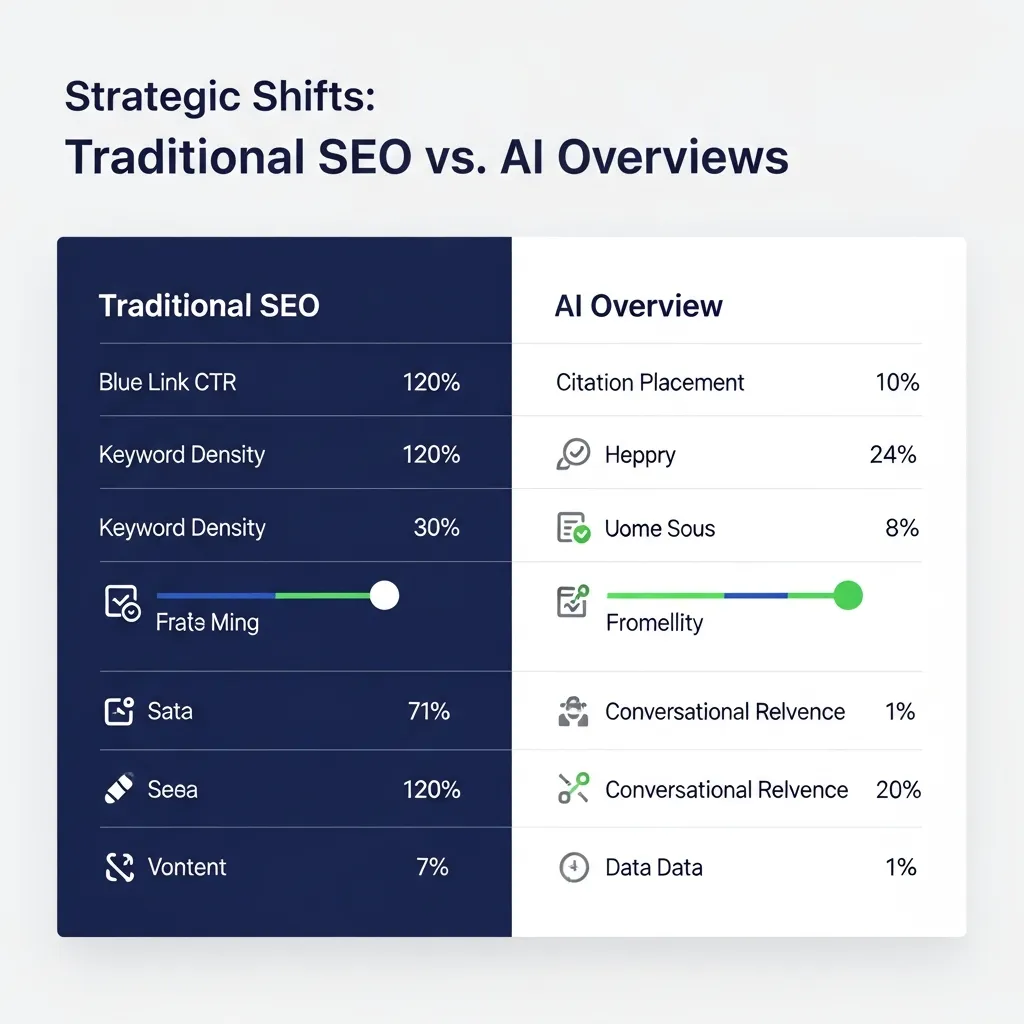

Traditional SEO focused heavily on keyword density and explicit matching for rankings. In contrast, AI Overviews demand a deep understanding of semantic relevance and user intent. While conventional SEO prioritizes driving click-through rates (CTR) to your site, the new paradigm often shifts the goal toward securing citation visibility within the AI Overview itself.

Neglecting foundational SEO principles in favor of AI-specific tactics is a common mistake; if AI cannot easily parse your content, it will not be cited. Balancing both approaches is the cornerstone of a modern AI Overviews strategy. Content that excels in semantic depth while maintaining strong technical SEO signals consistently performs better, ensuring both traditional discoverability and generative synthesizability.

Actionable Tactics to Combat Zero-Click Search Trends

To combat zero-click trends, target high-intent, long-tail queries that demand intricate explanations. While AI Overviews efficiently summarize basic information, they often struggle with complex analyses or novel insights, which compels users to engage more deeply with the source.

A common mistake is providing too comprehensive an answer in the introductory snippet, which removes the incentive to click. Instead, strategically create information gaps: offer enough value for an AI citation, but reserve full context, proprietary methodology, or detailed breakdowns for the main article. Prioritizing unique data and original research is a robust strategy, as it offers content that AI cannot easily replicate, compelling users to click for exclusive details.

Adapting to the Future of Search Discovery

The future demands a dual-track AI Overviews strategy, blending traditional methods with Generative Engine Optimization (GEO). A common mistake is neglecting the human element; content must remain user-first, delivering value that AI can synthesize and humans appreciate. This balance is crucial for sustained authority.

Actively monitor Search Console updates for AI-specific metrics. Proactive engagement allows you to refine your approach and maintain visibility as the technology evolves. To begin, apply the GEO framework's "Direct Answer" method to your top 10 informational pages today to ensure they are ready for the next generation of search.

Frequently Asked Questions

What is an AI Overviews strategy?

An AI Overviews strategy is a set of SEO and content practices designed to optimize visibility within generative search results, focusing on semantic relevance, authority, and AI-friendly formatting.

What is Generative Engine Optimization (GEO)?

GEO is a framework that adapts traditional SEO for AI models. It involves structuring content for easy parsing by LLMs, using direct answers, and reinforcing credibility through E-E-A-T signals.

How can I reduce the impact of zero-click searches?

To combat zero-click trends, target complex, long-tail queries, provide unique data or original research, and create "information gaps" that encourage users to click through for full context.

Which schema types are best for AI Overviews?

FAQPage, HowTo, and Product schemas are highly effective, as they provide structured, machine-readable data that AI models can easily extract and synthesize into summaries.