The Evolution of Retrieval: Understanding the AI Search Shift

SEO professionals are navigating a fundamental shift in how search engines retrieve information. Simple keyword matching is giving way to AI-driven retrieval systems in which Large Language Models (LLMs) power generative experiences such as AI Overviews and AI Mode. Traditional SEO strategies, once focused on keyword density and individual page optimization, are no longer sufficient on their own to secure visibility in these AI-first results. As discussed in Google AI search strategy, a more holistic approach is now essential.

This article demystifies query fan-out, a core AI mechanism driving this evolution. Query fan-out describes how AI engines semantically expand an initial user query into a network of related sub-queries to build a fuller picture of intent. For content marketers, the value of query fan-out optimization lies in recognizing that AI doesn't look for a single answer; it explores many related facets. Content therefore needs to establish topical authority and move beyond individual keywords to cover entire content clusters.

What is Query Fan-Out? Defining the Technical Process

In the context of Large Language Models (LLMs) powering modern search, query fan-out is the process of intelligently expanding a single user prompt into multiple, distinct, intent-based sub-searches. Rather than relying on a direct keyword lookup, the LLM semantically analyzes the initial query to discern its underlying nuances, entities, and implied user needs. This decomposition generates a series of targeted sub-queries, each designed to explore a specific facet or related angle of the original request.

The primary technical goal of this mechanism is to cast a wide net across the web. By executing these sub-searches, the AI system gathers a broad range of viewpoints and reduces potential information gaps. This multi-faceted retrieval underpins the synthesized answers found in features like AI Overviews or AI Mode. Understanding this stage is essential for effective query fan-out optimization, because it determines the breadth and quality of the information the LLM ultimately processes.
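As a rough illustration of the decomposition step, the sketch below expands one query into several facet-specific sub-queries. It is a toy model under stated assumptions: the facet names and templates in `FACET_TEMPLATES` are hypothetical, and real engines derive sub-queries with an LLM rather than with fixed strings.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical facet templates, invented for illustration only.
# A production system would generate sub-queries with an LLM.
FACET_TEMPLATES = {
    "definition": "what is {topic}",
    "how_to": "how to get started with {topic}",
    "comparison": "{topic} vs alternatives",
    "cost": "{topic} cost",
    "drawbacks": "{topic} drawbacks",
}


@dataclass(frozen=True)
class SubQuery:
    facet: str  # which angle of the topic this sub-query explores
    text: str   # the sub-query string that would be sent to retrieval


def fan_out(query: str) -> list[SubQuery]:
    """Expand one user query into one sub-query per facet."""
    topic = query.strip().lower()
    return [
        SubQuery(facet, template.format(topic=topic))
        for facet, template in FACET_TEMPLATES.items()
    ]


for sub_query in fan_out("Vertical farming"):
    print(f"{sub_query.facet:>10}: {sub_query.text}")
```

Even this simplified version shows why a page that answers only the literal query can miss most of the sub-searches issued on its behalf.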

How AI Engines Leverage Sub-Searches for Comprehensive Answers

AI engines use sub-searches to deliver comprehensive and accurate answers. After decomposing a primary query, Large Language Models (LLMs) run multiple targeted sub-searches across diverse web sources. This process, a core concern of query fan-out optimization, lets the engine verify facts, cross-reference data, and gather a broad spectrum of information before it composes a response.

Platforms like Google AI search and Perplexity AI use this fan-out approach to help reduce hallucinations. By checking information against multiple trusted sources, they aim to keep generated content factually grounded and lower the risk of inaccurate or fabricated details.

Following these sub-searches, the LLM synthesizes the results, combining and distilling the corroborated insights from every sub-query. The output is a single, cohesive AI overview or generated response that gives users a holistic answer to their original, complex query.
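The merge step can be pictured with a small rank-fusion sketch. The article does not describe how any engine combines sub-search results, so reciprocal rank fusion (RRF) is used here purely as a stand-in technique, and the URLs and rankings are invented for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(ranked_lists, k=60):
    """Merge per-sub-query rankings into one list.

    Each document scores 1 / (k + rank) for every list it appears in,
    so pages that surface across several sub-queries rise to the top.
    """
    scores = defaultdict(float)
    for results in ranked_lists.values():
        for rank, url in enumerate(results, start=1):
            scores[url] += 1.0 / (k + rank)
    # Highest score first; ties are broken alphabetically by URL.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical sub-query results (best match first).
results_by_sub_query = {
    "solar panel cost": ["example.com/solar-cost", "example.com/energy-guide"],
    "home battery storage": ["example.com/battery", "example.com/energy-guide"],
    "home energy audit": ["example.com/energy-guide", "example.com/audit"],
}

for url, score in reciprocal_rank_fusion(results_by_sub_query):
    print(f"{score:.4f}  {url}")
```

In this toy data, the page that appears under all three sub-queries outranks pages that top only one list, which mirrors the argument that broad topical coverage helps a source get selected.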

Strategic Content Frameworks for Query Fan-Out Optimization

Optimizing content for query fan-out requires a deliberate shift from single-keyword targeting toward a holistic, topic-centric approach. This involves designing content that anticipates and satisfies the various sub-queries an AI search engine might generate to answer a user's initial prompt comprehensively. The goal is to establish deep topical authority by demonstrating expertise across an entire subject domain rather than focusing on isolated keywords.

The Query Tree Mapping Framework

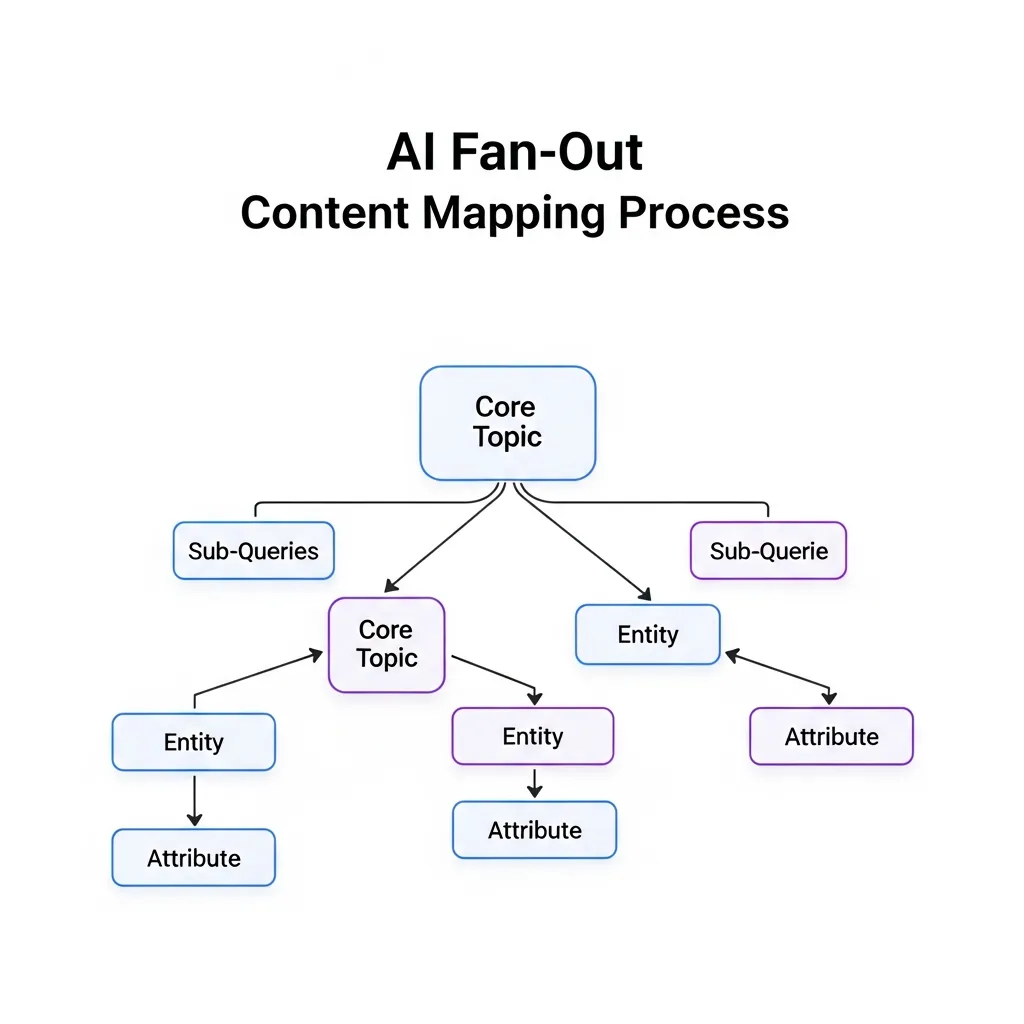

To effectively capture fan-out nodes, creators must first understand the "query tree" stemming from a primary topic. This involves identifying the facets, entities, and related questions an AI might explore. This framework visualizes the interconnectedness of information and guides content creation to cover these branches comprehensively.

The AI Fan-Out Content Mapping Process

Here is a structured approach to mapping your content for query fan-out:

- Core Topic Identification: Define your primary topic with precision. This serves as the trunk of your query tree (e.g., "Sustainable Urban Farming Techniques").

- Initial Sub-Query Brainstorming: List immediate, obvious sub-queries or user intents related to the core topic. Use the "who, what, when, where, why, and how" approach (e.g., "vertical farming benefits," "hydroponics vs. aeroponics," "urban garden design," "community agriculture initiatives").

- Entity Extraction & Expansion: Identify key entities within your primary topic and initial sub-queries. Research these entities to uncover their attributes, relationships, and common questions. Leverage tools that display "People Also Ask" or "Related Searches" (e.g., Entities: "Vertical farming," "Hydroponics," "Aeroponics," "Composting," "Permaculture." Expansion: "cost of vertical farms," "best plants for hydroponics," "benefits of composting in urban areas").

- Intent Categorization: Group identified sub-queries by search intent (informational, navigational, transactional, or commercial investigation). AI engines strive to satisfy diverse intents simultaneously.

- Competitor & AI Overview Analysis: Analyze competitor content ranking for your core topic and observe how AI Overviews or AI Mode responses address it. Identify which sub-topics they cover and which sources they cite to reveal gaps and opportunities.

- Content Cluster Development: Organize related sub-queries and entities into logical content clusters. Each cluster might form a comprehensive article, a series of posts, or dedicated sections within a pillar page. This creates a multi-path retrieval architecture, where multiple pieces of content collectively address the fan-out.
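The mapping steps above can be sketched as a small data structure that groups sub-queries by entity and intent. The sub-query strings come from the examples in this section; the intent label assigned to each one is an assumption made for illustration, not the output of any classifier.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SubQuery:
    text: str
    entity: str  # the entity the sub-query is about
    intent: str  # informational, navigational, transactional, or commercial


def build_clusters(sub_queries):
    """Group sub-queries into {entity: {intent: [texts]}}."""
    clusters = defaultdict(lambda: defaultdict(list))
    for sub_query in sub_queries:
        clusters[sub_query.entity][sub_query.intent].append(sub_query.text)
    # Convert to plain dicts so the result prints and compares cleanly.
    return {entity: dict(by_intent) for entity, by_intent in clusters.items()}


# Intent labels below are illustrative guesses.
query_tree = [
    SubQuery("vertical farming benefits", "vertical farming", "informational"),
    SubQuery("cost of vertical farms", "vertical farming", "commercial"),
    SubQuery("best plants for hydroponics", "hydroponics", "informational"),
    SubQuery("hydroponics vs. aeroponics", "hydroponics", "commercial"),
    SubQuery("benefits of composting in urban areas", "composting", "informational"),
]

for entity, by_intent in build_clusters(query_tree).items():
    print(entity, by_intent)
```

Each top-level key is a candidate content cluster, and an entity that has only one intent covered points to a possible gap.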

Semantic SEO and Entity-Based Writing

At the heart of optimizing for query fan-out are semantic SEO and entity-based writing. Instead of merely scattering keywords, content must demonstrate a deep understanding of the relationships between concepts and entities. This requires using a rich vocabulary of related terms, synonyms, and co-occurring phrases that naturally arise when discussing a topic in depth.

For example, an article on "electric vehicles" should inherently discuss "battery technology," "charging infrastructure," "range anxiety," "environmental impact," and "government incentives" as interconnected entities. This comprehensive coverage signals to Large Language Models (LLMs) that the content possesses genuine topical authority and can satisfy the diverse search intents that arise during a fan-out process.
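A quick way to audit a draft against this idea is to check which related entities it actually mentions. The sketch below is a deliberately naive substring check, not a model of how LLMs assess topical coverage; the draft text is invented, and the entity list is taken from the example above.

```python
import re


def entity_coverage(text, entities):
    """Return {entity: True/False} for whether each entity appears in text."""
    # Lowercase and collapse whitespace so line breaks do not hide a phrase.
    normalized = re.sub(r"\s+", " ", text.lower())
    return {entity: entity.lower() in normalized for entity in entities}


related_entities = [
    "battery technology",
    "charging infrastructure",
    "range anxiety",
    "environmental impact",
    "government incentives",
]

# Hypothetical draft excerpt.
draft = """Advances in battery technology and a growing charging
infrastructure are easing range anxiety for new buyers."""

missing = [e for e, found in entity_coverage(draft, related_entities).items() if not found]
print("Entities not yet covered:", missing)
```

Exact matching will miss synonyms and paraphrases, so treat the result as a prompt for editorial review rather than a score.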

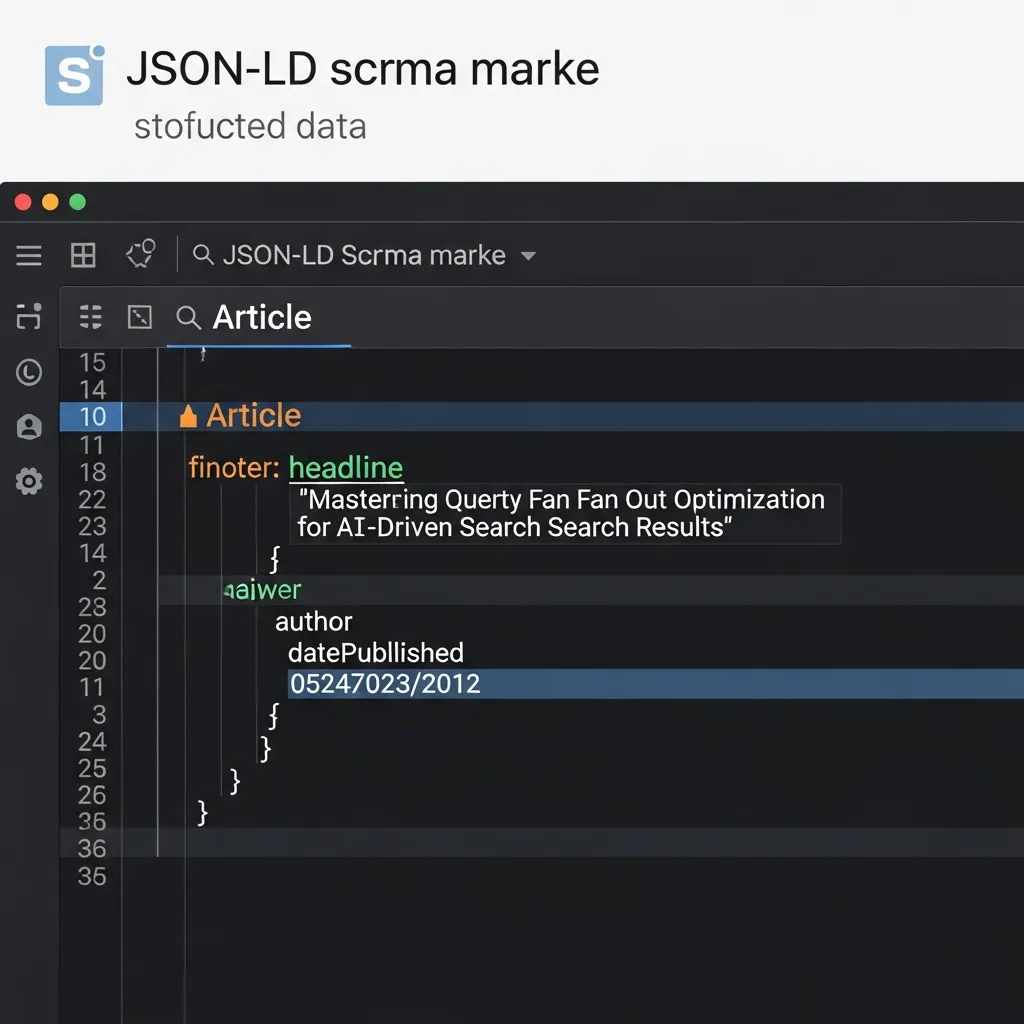

Structuring Data with Schema Markup

To facilitate AI extraction during query fan-out, content must be easily parseable. Schema markup (structured data) provides explicit signals to search engines about the meaning and relationships of content on a page. While not a direct ranking factor for traditional search, it significantly aids AI engines in understanding, extracting, and synthesizing information for AI-generated responses.

Implementing relevant schema types like Article, FAQPage, HowTo, Product, Recipe, or Event helps AI quickly identify key facts, steps, definitions, and answers. For instance, FAQPage schema clearly delineates questions and answers, which can make them stronger candidates for inclusion in AI Overviews, although inclusion is never guaranteed. Properly structured data helps AI understand the context and purpose of information, supporting accurate retrieval and synthesis.
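As a concrete example, the sketch below builds FAQPage structured data as JSON-LD using schema.org's `FAQPage`, `Question`, and `Answer` types. The helper name `faq_page_jsonld` is hypothetical, and the question and answer are taken from this article's own FAQ.

```python
import json


def faq_page_jsonld(faqs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


faqs = [
    (
        "What is query fan-out in AI search?",
        "Query fan-out is a process where AI engines expand a single user "
        "query into multiple related sub-queries.",
    ),
]

print(json.dumps(faq_page_jsonld(faqs), indent=2))
```

The printed JSON would typically be embedded in the page inside a `<script type="application/ld+json">` element, and the marked-up questions and answers should match the content visible on the page.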

Pro Tip: Focus on structuring the unique, valuable information within your content. If you have a custom calculator or unique research findings, consider using Dataset or WebPage schema with specific properties to highlight their significance to AI models.

Case Study Examples: Capturing Fan-Out Nodes

Consider a hypothetical content cluster centered on "Sustainable Home Energy." A well-optimized cluster would include a pillar page covering the broad topic, supported by satellite articles such as:

- "Solar Panel Installation Costs and ROI" (addressing commercial investigation and informational intent)

- "Choosing the Right Home Battery Storage System" (addressing product comparison and technical details)

- "DIY Home Energy Audit Steps" (addressing 'how-to' and practical application)

- "Government Rebates for Energy-Efficient Appliances" (addressing local/regional informational needs)

Each article within this cluster addresses a distinct sub-query while linking back to the main topic. When an AI receives a broad query like "How to make my home more energy-efficient?", it can fan out to these interconnected resources, extract relevant data points, and synthesize a multi-faceted answer. This draws on the collective authority of the entire cluster, demonstrating how a multi-path retrieval architecture successfully captures multiple fan-out nodes.
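A cluster like this can be audited with a short script that checks two things: whether every expected sub-query has an article addressing it, and whether every satellite links back to the pillar. The sketch below assumes you maintain this inventory by hand; the slugs, the sub-query labels, and the `Article` structure are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Article:
    slug: str
    sub_queries: set[str] = field(default_factory=set)  # sub-queries it answers
    links_to: set[str] = field(default_factory=set)     # slugs it links to


def audit_cluster(pillar_slug, articles, expected_sub_queries):
    """Report uncovered sub-queries and satellites missing a pillar link."""
    covered = set().union(*(a.sub_queries for a in articles))
    return {
        "uncovered_sub_queries": sorted(set(expected_sub_queries) - covered),
        "missing_pillar_links": sorted(
            a.slug
            for a in articles
            if a.slug != pillar_slug and pillar_slug not in a.links_to
        ),
    }


cluster = [
    Article("sustainable-home-energy", {"home energy efficiency overview"}),
    Article("solar-costs-roi", {"solar panel cost"}, {"sustainable-home-energy"}),
    Article("home-battery-storage", {"home battery comparison"}, {"sustainable-home-energy"}),
    Article("diy-energy-audit", {"home energy audit steps"}),  # no link to pillar
]

expected = [
    "solar panel cost",
    "home battery comparison",
    "home energy audit steps",
    "appliance rebates",
]

print(audit_cluster("sustainable-home-energy", cluster, expected))
```

Here the report would flag the rebates sub-query as uncovered and the audit article as missing its link to the pillar page, which are the two kinds of gap that weaken a cluster.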

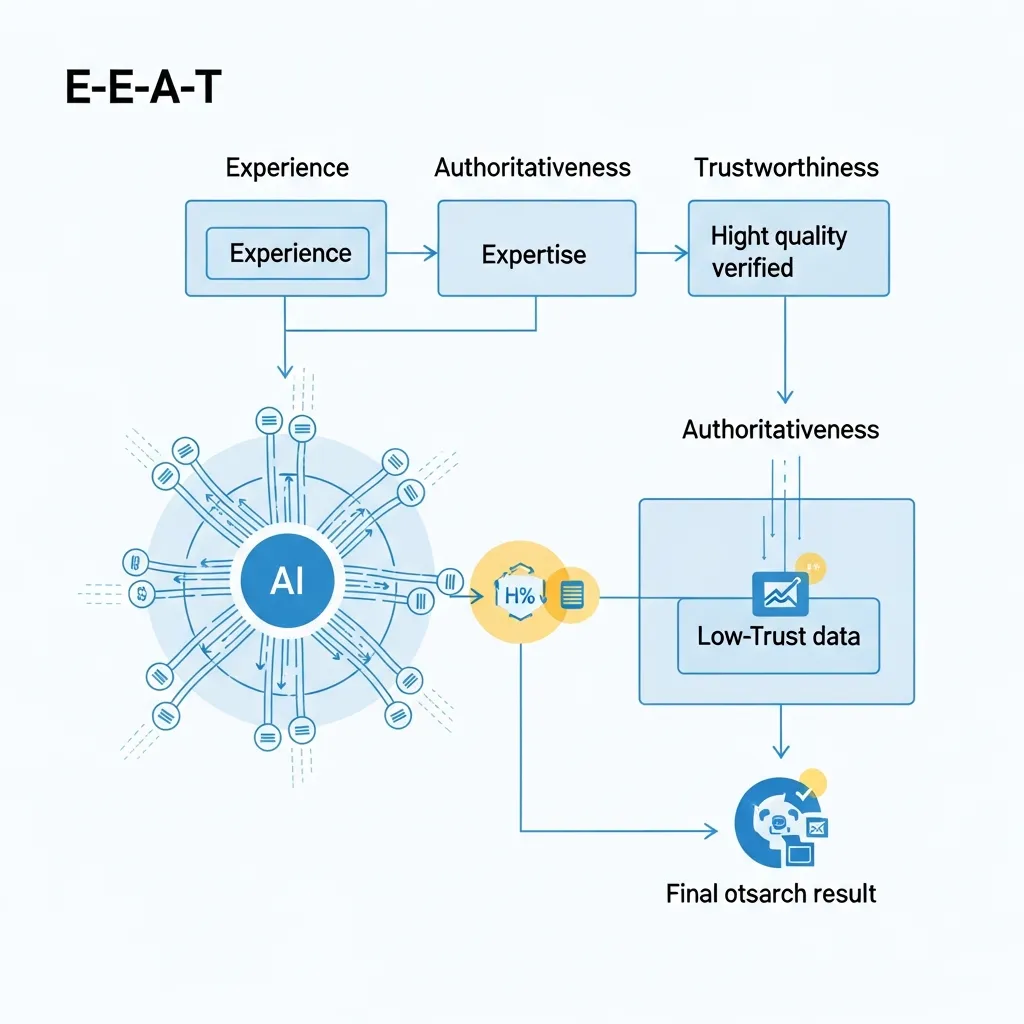

Leveraging E-E-A-T to Win AI Sub-Search Selection

AI search engines, particularly for AI Overviews and complex sub-queries, tend to favor sources that demonstrate E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). This framework matters for ensuring accuracy and limiting misinformation, especially in high-stakes fields like health, finance, or technical instruction. Content that demonstrates strong E-E-A-T signals reliability, positioning it as a preferred source for generative responses.

Building topical authority is paramount for winning AI sub-search selection. This requires moving beyond superficial coverage to address a core topic and its related subtopics comprehensively. By creating a network of interconnected content that explores every facet of a subject, publishers establish themselves as definitive sources. This depth satisfies the AI's need for holistic understanding during query fan-out.

In a generative landscape where information is easily synthesized, unique insights and first-hand experience serve as vital differentiators. Content offering original research, proprietary data, or authentic experiential perspectives provides value that AI cannot simply compile from existing public data. Such contributions elevate a source's expertise and trustworthiness, making it highly attractive for AI selection and allowing the content to stand out.

Common Mistakes to Avoid in Generative Engine Optimization

A prevalent error in Generative Engine Optimization is producing thin content that merely skims the surface and fails to address the nuanced sub-questions AI models generate. This often happens when creators neglect query fan-out optimization and rely on outdated keyword over-optimization at the expense of the logical flow and semantic depth that comprehensive AI-powered answers require. Such fragmented content fails to establish genuine topical authority. Many creators also neglect the user's primary pain point, prioritizing broad but superficial coverage over directly resolving the specific problems and diverse intents users bring to AI search. Effective optimization demands a clear understanding of user needs and content that thoroughly resolves each anticipated sub-query.

Future-Proofing Your Content for an AI-First World

Query fan-out is becoming central to information retrieval, as AI engines increasingly synthesize answers from a diverse range of sub-queries. To thrive in this landscape, content must be expert-led, demonstrate deep topical authority, and address the full spectrum of user intents. Start optimizing your content now so that it covers as many of the likely sub-queries as possible.

Frequently Asked Questions

What is query fan-out in AI search?

Query fan-out is a process where AI engines expand a single user query into multiple related sub-queries to better understand intent and gather a comprehensive set of information from various sources.

Why is query fan-out optimization important for SEO?

It is essential because modern AI search engines no longer rely on simple keyword matching. Optimizing for fan-out ensures your content addresses the semantic sub-topics and facets that AI engines explore to generate answers.

How can I implement query fan-out optimization?

You can implement it by using the Query Tree Mapping framework, focusing on semantic SEO and entity-based writing, establishing topical authority through content clusters, and using structured data.

What role does E-E-A-T play in query fan-out?

E-E-A-T is critical because AI engines prioritize expert-led, authoritative content when selecting which sources to synthesize into their final generated answers.