The Evolution of Evaluation in Retrieval-Augmented Generation

The era of subjective "vibe checks" for RAG outputs is over. For production-grade AI, rigorous and quantifiable evaluation is now indispensable. Field observations confirm that without precise metrics, inconsistencies quickly undermine user trust and system reliability. Evaluating a RAG system's multi-stage pipeline—from retrieval to generation—presents unique challenges. For instance, a system might retrieve irrelevant documents, leading directly to a hallucinated response. Understanding these nuances is vital for measuring RAG success. For a comprehensive overview of how this impacts visibility, see RAG SEO strategies.

Key challenges include:

- Assessing component-level performance.

- Measuring overall end-to-end quality.

The Dual-Component Architecture: Why Component-Level Evaluation Matters

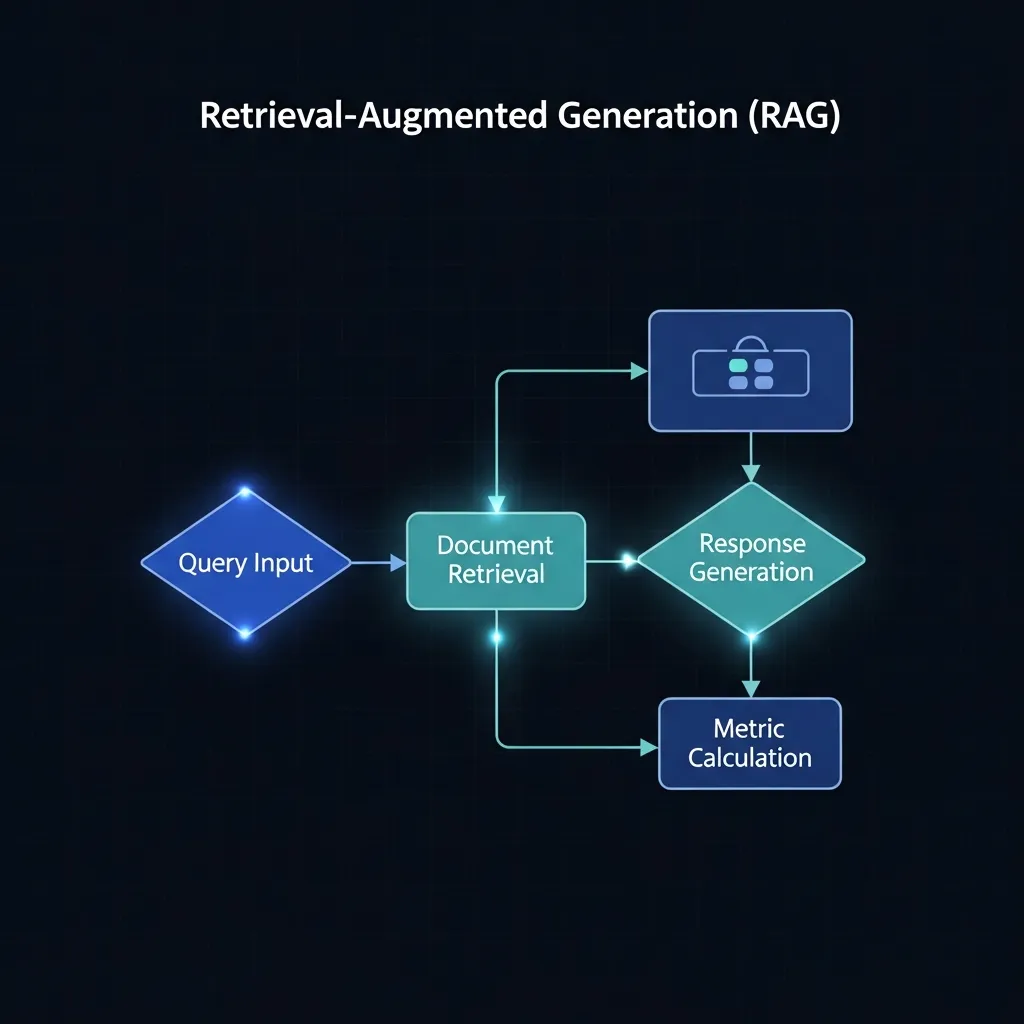

The architecture of RAG systems is fundamentally divided into a retrieval component and a generation component. Effective evaluation necessitates distinguishing between a retrieval failure, where irrelevant or insufficient context is fetched, and a generation failure, where the LLM struggles to produce a coherent answer even with high-quality input.

Practical experience shows that poor retrieval frequently leads to cascading errors. If the context provided by retrieval is substandard or misaligned, the subsequent generation stage is inherently compromised, irrespective of the LLM's capabilities. This dependency highlights the critical importance of component-level evaluation. Isolated testing of each module allows ML engineers to precisely identify the root cause of an issue, significantly accelerating debugging efforts and enabling more focused system improvements.

Essential Metrics for Retrieval and Generation Performance

Having established the necessity of component-level evaluation, selecting the right metrics is the cornerstone of measuring RAG success effectively. A robust framework blends quantitative measures for both retrieval and generation, reflecting the intricate interplay between the query, the retrieved context, and the final response—what we term the RAG Triad.

Evaluating Retrieval Accuracy

Effective RAG relies on fetching relevant information. Key metrics include:

- Precision@K: Measures the proportion of relevant documents within the top K retrieved results. This is critical when only a few highly relevant documents suffice, reflecting the user's immediate need for accurate, top-ranked information. Practical experience shows high Precision@1 or Precision@3 is paramount for many RAG applications.

- Recall: Quantifies the proportion of all relevant documents in the corpus successfully retrieved. While Precision@K focuses on top results, Recall ensures comprehensive coverage, which is vital where exhaustiveness is preferred.

- Mean Reciprocal Rank (MRR): Evaluates ranking quality by scoring higher for earlier placement of the first relevant document. This is useful for single-answer queries where the position of the first correct answer is highly valued.

- Normalized Discounted Cumulative Gain (nDCG): A sophisticated metric accounting for graded relevance and penalizing relevant items lower in the results. nDCG provides a nuanced view of ranking quality suitable for complex queries.

Assessing Generation Quality

Once context is retrieved, the LLM’s ability to synthesize a coherent, accurate, and relevant answer comes under scrutiny. The RAG Triad of Query, Context, and Response guides this assessment:

- Faithfulness (Groundedness): Assesses whether the generated answer is factually supported by the retrieved context, guarding against hallucinations. Field observations indicate this is often the most challenging metric to optimize.

- Answer Relevance: Measures how directly and completely the generated answer addresses the user's original query, independent of the context. An answer might be faithful but irrelevant if the context was poor.

- Context Precision: Evaluates how much of the retrieved context was actually useful. This identifies if retrieval provides noisy or irrelevant information, impacting LLM processing, latency, and cost.

Beyond Lexical Match: Semantic Metrics

Traditional NLP metrics like BLEU and ROUGE rely on lexical overlap, often falling short for evaluating generated text in RAG systems. They struggle to capture semantic equivalence. Semantic metrics like BERTScore offer a more robust alternative, leveraging contextual embeddings to compare semantic similarity between generated and reference answers, providing a more human-like assessment.

Scaling Evaluation with Synthetic Data

Manually creating comprehensive, high-quality test sets for RAG evaluation is resource-intensive. Synthetic data generation becomes invaluable here. Programmatically generating diverse queries, contexts, and even reference answers creates scalable, consistent benchmarks. This allows rapid iteration and robust performance tracking without human annotation bottlenecks.

The RAG Quality Assurance Protocol

To systematically evaluate the RAG Triad, consider this protocol:

- Query-Context Alignment: Verify retrieved context relevance and comprehensiveness (Precision@K, Recall, MRR, nDCG).

- Context-Response Grounding: Confirm the response is supported by context, preventing hallucinations (Faithfulness).

- Query-Response Utility: Ensure the response directly addresses the original query (Answer Relevance).

- Context Efficiency: Identify and reduce noise in retrieved context (Context Precision).

- Semantic Coherence: Assess overall semantic quality against a reference (BERTScore).

This structured approach provides a holistic view, pinpointing optimization areas in both retrieval and generation stages.

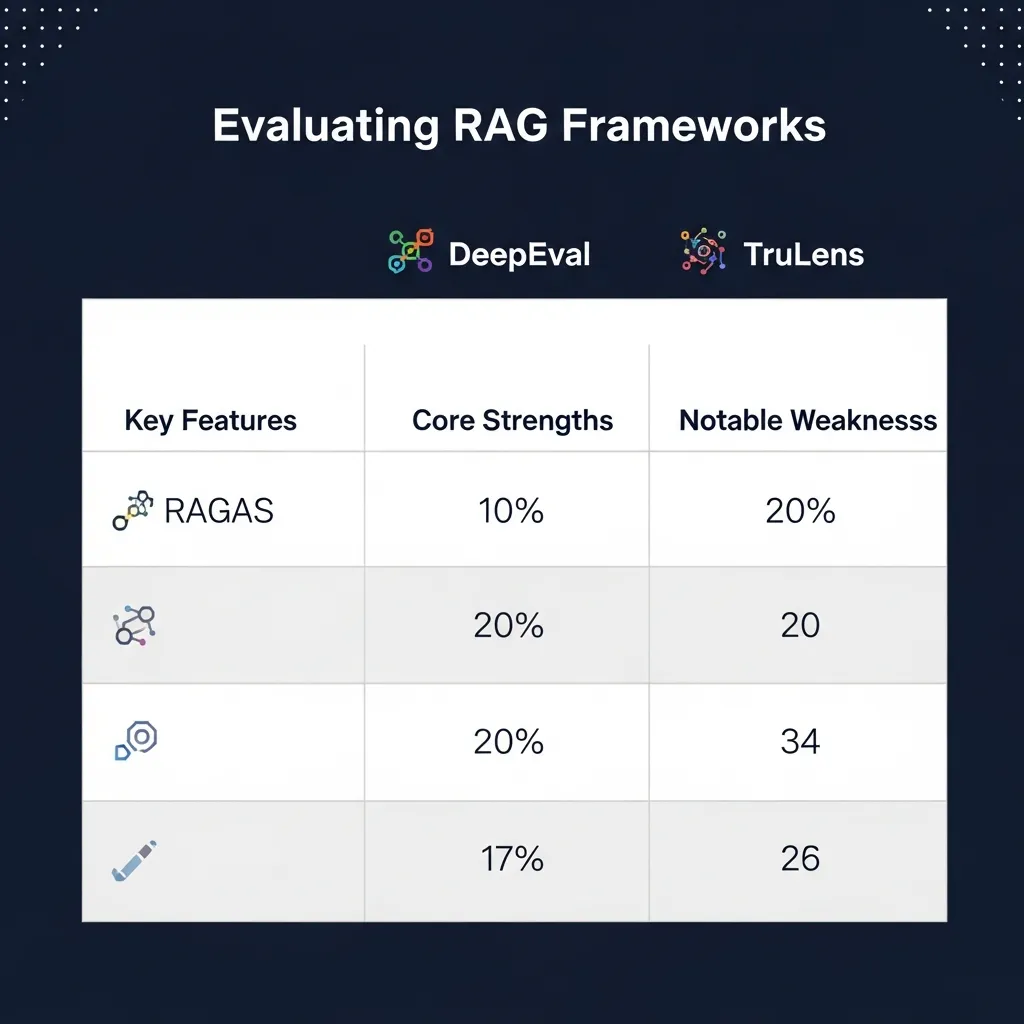

Comparing LLM-as-a-Judge Frameworks: RAGAS, DeepEval, and TruLens

LLM-as-a-Judge frameworks automate RAG evaluation, moving beyond labor-intensive manual checks. RAGAS provides rapid, automated metrics like faithfulness and answer relevance, ideal for quick baselining during development. Its strength is speed, though LLM judgment can introduce inconsistencies, requiring careful validation.

DeepEval offers a unit testing approach for LLMs, integrating into CI/CD pipelines. It enables writing specific test cases to ensure predictable RAG system behavior, powerful for enforcing quality gates. However, defining comprehensive test suites demands initial effort.

TruLens focuses on observability and feedback loops, tracing RAG pipelines end-to-end. It allows inspecting intermediate steps, debugging production issues, and integrating human feedback. In my experience, while RAGAS offers a snapshot, TruLens is indispensable for understanding why performance fluctuates in live environments—a common challenge.

Selecting the right tool depends on project maturity. RAGAS suits rapid prototyping. DeepEval is for robust, test-driven development. TruLens is paramount for production monitoring. Many projects combine these: RAGAS for initial validation and TruLens for ongoing operational insights.

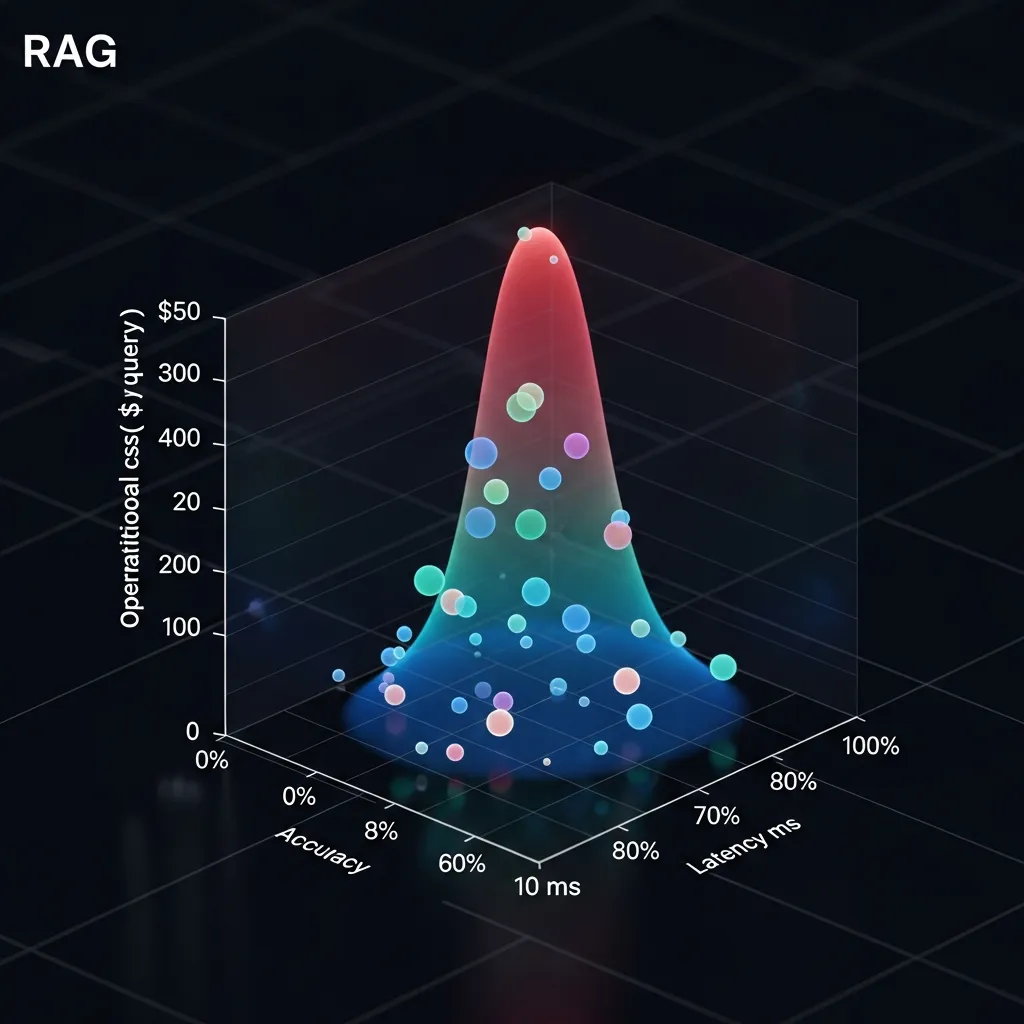

The Trade-off Matrix: Balancing Accuracy, Latency, and Cost

Ultimately, measuring RAG success requires balancing accuracy, latency, and cost. High-end LLM for evaluation incur significant hidden costs; a common mistake I've encountered is underestimating cumulative API expenses for extensive automated testing, which can rapidly inflate budgets.

Complex retrieval strategies, like multi-stage ranking, directly impact system latency. My experience shows that while these promise accuracy, they often introduce unacceptable delays for real-time production. Finding the 'sweet spot' means defining acceptable latency and cost thresholds before optimizing for accuracy.

In my view, the most effective approach prioritizes accuracy during initial development. As the RAG system matures, the focus shifts to balancing this with latency and cost-efficiency for a sustainable, performant production solution.

Maintaining Quality in Dynamic Environments: Production Monitoring Strategies

Maintaining RAG quality in dynamic production environments requires constant vigilance. As knowledge bases evolve, continuous monitoring is crucial to detect performance drift, where retrieval relevance or generation quality degrades. Implementing a robust human-in-the-loop (HITL) feedback mechanism is paramount; it captures nuanced failures and provides expert annotations for guiding model fine-tuning and ensuring continuous improvement.

For real-time quality assurance, practical experience shows that strategies like confidence scoring based on retrieved context coherence or anomaly detection in generated responses can effectively flag potential hallucinations for immediate human review. This integrated approach ensures sustained accuracy and trustworthiness.

Avoiding Common Evaluation Pitfalls

Robust RAG evaluation demands avoiding common pitfalls. Over-relying on synthetic data is a significant danger; in my experience, models optimized solely on controlled datasets often struggle with the nuanced complexity of real user interactions.

Similarly, while a high BLEU score indicates fluency, it doesn't guarantee factual accuracy or faithfulness to the retrieved context—a common mistake I've observed is equating lexical similarity with truthfulness. Critically, ignoring the user context and intent can result in systems providing technically correct but ultimately unhelpful responses, missing the user's true underlying need.

Building a Sustainable Framework for Long-Term Success

Sustaining long-term performance requires a standardized approach to measuring RAG success. Adopting standardized metrics is paramount for objective comparison. In my experience, relying solely on intuition often leads to suboptimal performance; instead, consistently iterate based on real-world data to refine your system. Implement a feedback loop for your RAG system today to ensure your AI continues to deliver value.

Frequently Asked Questions

What is the RAG Triad?

The RAG Triad consists of the Query, the retrieved Context, and the generated Response. Evaluating the relationships between these three elements is essential for measuring RAG success.

How do you measure retrieval performance in RAG?

Retrieval performance is typically measured using metrics like Precision@K, Recall, Mean Reciprocal Rank (MRR), and Normalized Discounted Cumulative Gain (nDCG).

What are LLM-as-a-judge frameworks?

Frameworks like RAGAS, DeepEval, and TruLens use Large Language Models to automate the evaluation of RAG outputs, providing scores for faithfulness, relevance, and more.

Why is component-level evaluation important for RAG?

It allows developers to identify whether a failure occurred during the retrieval stage (wrong information fetched) or the generation stage (wrong answer produced), making debugging much faster.