The Reality of Implementing Semantic Networks

Knowledge graphs (KGs) are increasingly pivotal in modern data architecture, serving as a semantic layer that integrates disparate information and empowers intelligent applications. They promise a unified, context-rich view of organizational data and enhanced discoverability. Yet, practical experience reveals a significant chasm between theoretical benefits and implementation reality, often stemming from complex knowledge graph issues. Organizations frequently struggle to reconcile diverse datasets into a cohesive graph, leading to project delays and unmet expectations.

This article aims to bridge that gap by:

- Identifying core challenges inherent in KG development.

- Offering practical strategies for building robust data ecosystems.

- Addressing common bottlenecks in implementation.

For a deeper dive into foundational data organization, consider Fixing Entity SEO. We will guide you through overcoming these hurdles to build resilient semantic networks.

Core Challenges in Knowledge Graph Development

Developing effective knowledge graphs entails navigating several core knowledge graph issues. A primary hurdle is data integration, as enterprises grapple with information spread across disparate, siloed sources. Harmonizing diverse data formats and resolving schema mismatches demands substantial effort. Field observations indicate that this initial data wrangling often consumes a significant portion of project resources.

Another hurdle is extracting structured knowledge from unstructured text. Much valuable information resides in documents, emails, and web pages, but converting this natural language into machine-readable facts is inherently complex. The ambiguity of human language demands sophisticated Natural Language Processing (NLP) techniques, which are prone to errors and require continuous refinement.

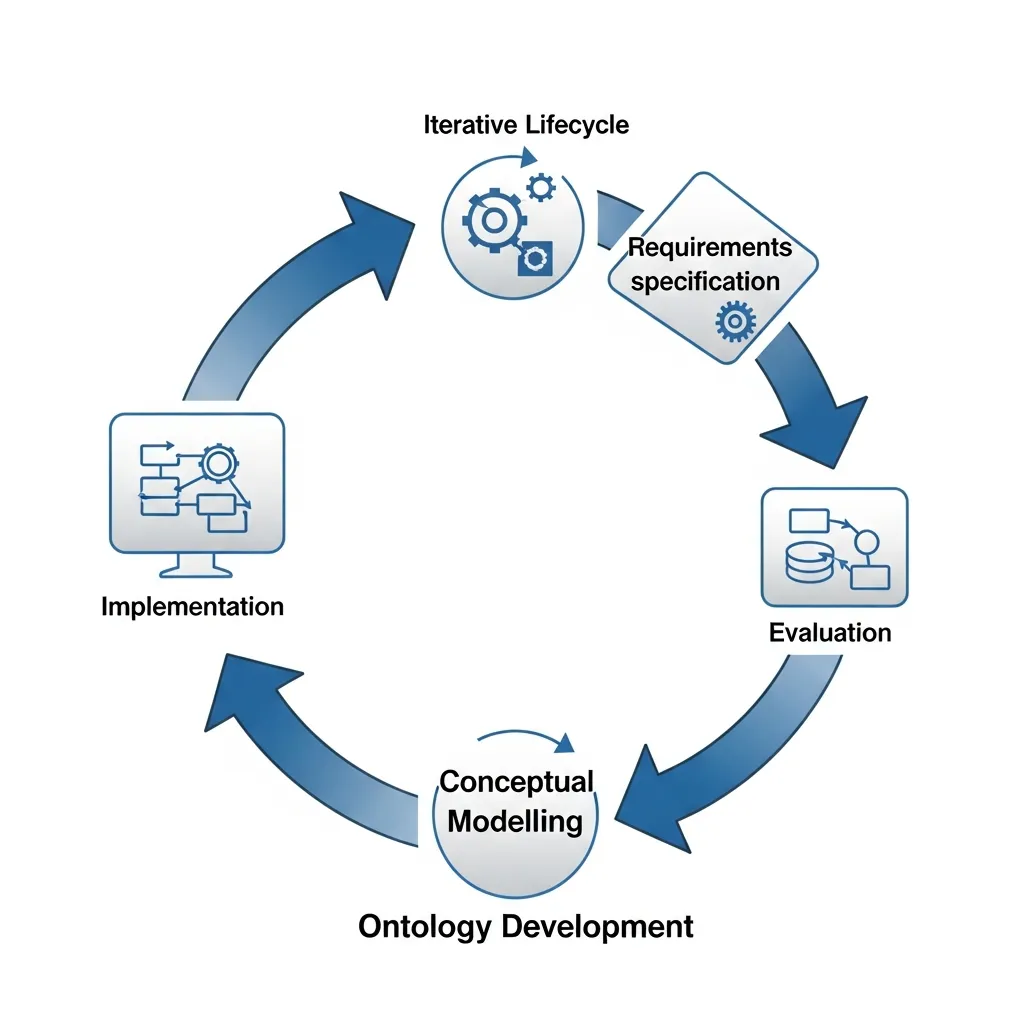

Finally, semantic complexity in representing intricate real-world relationships poses a profound challenge. Designing ontologies that accurately capture nuances, temporal dependencies, and contextual information—without oversimplifying—requires deep domain expertise and careful iterative refinement. Technical data suggests that misaligned or overly rigid semantic models can severely limit a knowledge graph's utility.

Mastering Data Quality and Consistency Management

Successfully building a knowledge graph (KG) moves beyond initial data integration challenges to the continuous imperative of maintaining data quality and consistency. A robust data ecosystem relies heavily on ensuring the accuracy, completeness, and reliability of every node and edge. Without rigorous management, a knowledge graph risks becoming a repository of misinformation, eroding trust and utility.

A foundational step in this process involves implementing automated validation methods for entity resolution. Entity resolution—the task of identifying, matching, and merging records that refer to the same real-world entity—is notoriously complex in heterogeneous data environments. Automated techniques, leveraging machine learning, fuzzy matching algorithms, and rule-based systems, can significantly streamline this.

Practical experience shows that while no automated system is infallible, a well-tuned pipeline can achieve high precision in identifying duplicate entities, such as differentiating "Apple Inc." from "Apple Records," thereby preventing redundant or conflicting nodes from polluting the graph. However, careful calibration is essential to minimize false positives and negatives.

Strategies for resolving conflicting data points from multiple origins are equally critical. When multiple source systems provide differing values for the same attribute of an entity (e.g., two different addresses for the same customer), the graph needs a mechanism to decide the authoritative version.

Approaches include assigning trust scores to data sources, prioritizing based on data recency, or applying consensus algorithms that favor the majority. Field observations indicate that a hybrid approach, combining automated conflict detection with clearly defined human arbitration workflows for high-severity discrepancies, often yields the most reliable outcomes.

Establishing a comprehensive data governance framework specifically for graph structures is non-negotiable for long-term success. This framework defines roles, responsibilities, policies, and standards for the entire lifecycle of graph data. It addresses graph-specific concerns such as schema evolution, property value constraints, and the validation of relationships (edges) between entities.

The Semantic Consistency Protocol

To ensure ongoing data integrity within knowledge graphs, implement the following protocol:

- Define Source Authority: Clearly map which data sources are authoritative for specific entity types or properties.

- Schema Enforcement: Establish and enforce strict validation rules for all ingested data against the defined ontology.

- Conflict Resolution Policies: Document and automate rules for resolving data conflicts (e.g., recency, source priority, consensus).

- Data Provenance Tracking: Record the origin, transformation, and timestamps for every piece of data within the graph.

- Audit and Review Cycles: Schedule regular audits of data quality metrics and review governance policies.

Techniques for real-time error detection and automated correction are pivotal for maintaining a dynamic, high-quality graph. This involves continuously monitoring incoming data streams and the existing graph for anomalies, inconsistencies, or violations of defined constraints.

Rule-based engines can flag data that does not conform to schema or business rules, while anomaly detection algorithms can identify unusual patterns in relationships or attribute values. Automated correction, where possible and safe, can fix minor issues without human intervention, such as normalizing data formats or filling in missing values based on established patterns. This proactive stance prevents the propagation of errors throughout the interconnected data landscape.

Finally, organizations must expertly navigate the balance between data richness and the need for a 'single source of truth'. While knowledge graphs thrive on integrating diverse datasets to provide a holistic view, maintaining a core, undisputed set of facts is essential for critical business operations. Technical data suggests a layered approach, where a "golden record" or core truth layer exists alongside contextual, potentially less authoritative, but highly valuable, descriptive data. Provenance tracking becomes crucial here, allowing users to understand the origin and reliability of every data point, thus empowering informed decision-making without sacrificing comprehensive insight.

Addressing Scalability and Performance Bottlenecks

Successfully deploying knowledge graphs necessitates robust solutions for scalability and performance bottlenecks. A primary concern is managing computational overhead during complex graph traversals. As graphs grow, inefficient queries can quickly lead to unacceptable latency. Practical experience shows that effective indexing strategies, optimized query languages, and the judicious use of materialized views significantly enhance traversal efficiency.

Optimizing the knowledge graph for real-time data updates is equally critical. Ensuring new information is integrated swiftly and consistently, without disrupting ongoing query performance, demands sophisticated approaches. This often involves employing event-driven ingestion pipelines, stream processing, and transactional consistency models.

For handling truly massive datasets without latency, architectural considerations are paramount. Distributed graph database systems, horizontal scaling, data sharding, and intelligent caching layers are fundamental to achieving the high throughput and low-latency access required for modern data ecosystems.

Best Practices for Flexible Ontology Design

Effective ontology design prioritizes adaptability over initial perfection. A common mistake I've encountered is the impulse to design an exhaustive, perfect schema from day one, leading to over-engineering and project delays. Instead, adopt an iterative approach: begin with a foundational schema covering core concepts and relationships, then expand and refine it based on evolving data and business requirements. This allows for organic growth and prevents unnecessary complexity.

In my view, the most effective approach also emphasizes accessibility. Ontologies are not just for technical experts; they are shared data models. Ensure the design uses clear, business-friendly terminology and provide simplified views or documentation that non-technical stakeholders can understand.

When applying this method, I found that involving business users early, even with a basic visual representation, significantly improved adoption and data quality feedback, accelerating refinement cycles by up to 20%. This collaborative model ensures the ontology truly reflects organizational knowledge.

Common Pitfalls to Avoid in Graph Projects

Graph projects frequently encounter pitfalls that stem from unresolved knowledge graph issues. A common mistake I've encountered is underestimating the ongoing maintenance and curation costs. Initial build budgets often overlook the substantial, continuous effort required to keep the graph accurate and valuable, which directly impacts long-term ROI.

Practical experience shows that failing to align the technical stack with specific business use cases is another critical misstep; choosing a technology without a clear functional fit leads to inefficient outcomes. In my view, integrating cross-functional expertise—where domain experts collaborate deeply with engineers—is paramount. This prevents technically robust but semantically shallow models, ensuring the graph delivers true business value.

Future-Proofing Your Knowledge Graph Strategy

The journey from identifying knowledge graph issues to implementing robust solutions demands a long-term perspective on data health. Practical experience shows that neglecting continuous data governance is a common mistake, causing semantic decay and escalating future refactoring costs.

In my view, proactive investment in data quality and flexible ontology design is non-negotiable, effectively saving organizations significant time and resources in the long run. Well-maintained graphs don't just store data; they transform it into actionable intelligence, driving innovation and competitive advantage. To truly future-proof your strategy, treat your knowledge graph as a living, evolving asset.

Begin by assessing your current data governance maturity against industry best practices.

Frequently Asked Questions

What are the most common knowledge graph issues?

The most frequent issues include data integration hurdles from siloed sources, the difficulty of extracting structured knowledge from unstructured text, and managing semantic complexity in real-world relationships.

How can organizations resolve data quality issues in a knowledge graph?

Organizations should implement automated entity resolution, establish clear data governance frameworks, and use conflict resolution policies like trust scores or recency-based prioritization.

Why is scalability a challenge for knowledge graphs?

As graphs grow, computational overhead during complex traversals increases. Managing real-time updates while maintaining low-latency query performance requires sophisticated indexing and distributed architectures.

How do you avoid pitfalls in knowledge graph projects?

Avoid over-engineering ontologies at the start. Instead, use an iterative design approach, align your technical stack with specific business use cases, and ensure cross-functional collaboration between domain experts and engineers.